Recently, Cory Dansfeldt's blog post titled "I block ads" went viral on Hacker News.

I find myself doing much the same things he does.

How I block ads

I use Safari extensions on macOS, iPadOS and iOS, such as Wipr, 1Blocker, Hush and Vinegar. I paid for these apps because they make browsing so much more enjoyable, less cluttered and faster. On top of that, I also block ads with the help of NextDNS. As an added bonus, whenever visitors are on my guest Wi-Fi, their TikTok, Instagram and the like are blocked too, which makes for a truly undisturbed and cosy evening.

Ads are malware?

All this attention-grabbing is an attack on the mind, a focus-sapping drain on our happiness. That is probably why I found this comment by user "Nextgrid" very insightful:

There is no difference between ads and malware - both are code that uses your machine to make it do something undesirable, and often stalk you (modern adtech is often way more powerful than the spyware of the old days) in addition. It is your absolute right to block all malicious code.

Operating systems mostly wisened up to their earlier vulnerabilities and patched most of the avenues malicious code could load itself on your machine. Browsers countered the early abuses of the pop-up window feature by blocking them and nobody complained. Operating systems and browsers should include ad- and spyware-blocking by default just like they block conventional binary viruses.

Of course, one problem with blocking ads is that it's really hard to distinguish them from normal text or images on websites. So out-of-the-box blocking will always have to rely on blocklists like the ones that uBlock Origin uses. These lists depend on knowing the bad actors in this space, and the domain names that they serve ads with. Blocking all JavaScript will stop some trackers, but it will also break many features of websites.
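To make the blocklist idea a bit more concrete, here is a minimal sketch in Python of domain-based matching. It is an illustration only: the domains in BLOCKLIST and the function name is_blocked are made up for this example, and real blockers such as uBlock Origin use a much richer filter syntax than a plain set of domain names.

```python
from urllib.parse import urlsplit

# Hypothetical entries for illustration; real lists contain many thousands of domains.
BLOCKLIST = {"ads.example.com", "tracker.example.net"}


def is_blocked(url: str, blocklist: set[str] = BLOCKLIST) -> bool:
    """Return True if the URL's host, or any parent domain of it, is on the blocklist."""
    host = (urlsplit(url).hostname or "").lower()
    labels = host.split(".")
    # Check "a.b.c", then "b.c", then "c", so subdomains of a listed domain match too.
    return any(".".join(labels[i:]) in blocklist for i in range(len(labels)))
```

A request to a listed domain or one of its subdomains is refused, and everything else passes. That is also why this approach only works for ad servers that someone has already identified and added to the list.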

Google makes it more difficult

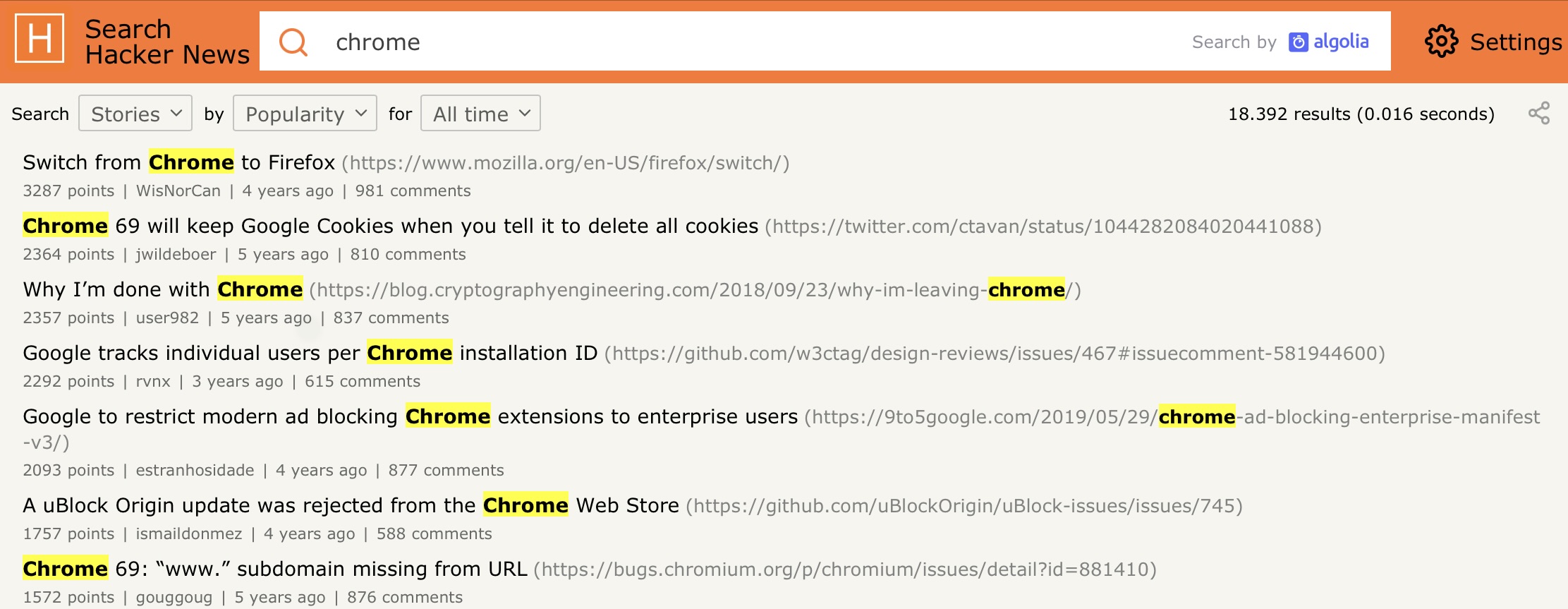

This will always be a cat-and-mouse game. Google upped the ante by making a fairly good web browser, Chrome, and now that more than 50% of all internet traffic globally goes through this browser, they are making it harder to block ads in it, as several recent headlines illustrate. Well played, Google.

Are all ads evil?

An interesting opposing view was that of user "jader201", who wrote:

In 2004, I started a small website on the side that had no business value at all. It was just a small community of video game fans (specifically Animal Crossing), and pretty quickly, the costs outgrew what I was willing to afford on my own.

So I opened up donations. Unfortunately that didn’t get me very far, so I eventually— and begrudgingly — added ads. I was very intentional about keeping them as unobtrusive as possible: just some banner ads at the top and bottom of each thread and forum, and a square next to the first unread comment. I hated pop-ups (the kind that opened in a new window — very common at that time), and I didn’t have them on any other page on the site (just forums).

This gained me about 10x the revenue I got from donations alone, and was able to scale the site’s hardware, allowing me to keep the site up and running for nearly 20 years, and provided some modest passive income for a time. (I just recently sold it to someone else, but it’s still up and running.)

My point is, that wouldn’t have been possible without ads.

This is an interesting point, and one I run into as well. It's almost impossible to make a living from writing unless you are very well known. I hear Jason Kottke can live off the income generated by his blog, but he started 8 years earlier than I did, and he puts way more time and effort into his blog than I ever did.

I like to write about technology, photography, financial well-being, and generally about whatever I fancy that day. But I don't even dream of making a living off my musings. In fact, very recently, I signed up for the Amazon affiliate program again. I think I tried it in 2017 but didn't generate enough clicks, so I was kicked out of the program. Also, there are now Google banners on my blogs. They don't generate any money yet, but a guy's got to dream, right?

Ads are a lose-lose outcome

The reason I resisted having Amazon affiliate links or banners on my blogs and websites for so long is a simple one: I want to practise what I preach. I don't like to see ads on websites; hence, I block them. I don't mind affiliate links so much, but they are not ideal either.

What interested me is that many comments on Hacker News focus not on how people block ads, but on why. It seems to me that many of us technologically inclined folk feel deep down that, although we have every right to block ads, we are depriving hard-working bloggers and maintainers of small forums and community websites of the money they so desperately need to stay afloat.

How to pay makers/writers/authors/singers directly?

One US Dollar

How to thank the creator of the link, as well as the creator of the content?

This is a great way to pay the creator of the content. But how do you pay the person who pointed you to the interesting link? Do you go back in your browser to tip them? Do you even think about that, ever? I guess not. Wouldn't it be great if it were a convention to write links as follows:

<p>My favourite website is <a tip="tip-to-jan@janromme.com" href="https://somesite.com">this website</a>.</p>

There are already other kinds of data that we can put in an HTML link. For example, there are a bunch of relationship values (like author, source, external) that we can put in the rel attribute. What if there were a tip attribute as well, where someone could simply add their lightning address to receive tips for the trouble of linking you to this useful content?
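As a rough sketch of how a tool might pick up such links, the Python snippet below collects every link on a page that carries a tip attribute. Keep in mind that the tip attribute is only my proposal and not part of any HTML standard, and that the names TipLinkParser and find_tip_links are invented for this example. It uses only html.parser from the standard library.

```python
from html.parser import HTMLParser


class TipLinkParser(HTMLParser):
    """Collect (href, tip) pairs from <a> tags that carry the hypothetical tip attribute."""

    def __init__(self) -> None:
        super().__init__()
        self.tips: list[tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        tip = attributes.get("tip")    # proposed attribute, not standard HTML
        href = attributes.get("href")
        if tip and href:
            self.tips.append((href, tip))


def find_tip_links(html: str) -> list[tuple[str, str]]:
    """Return every (link target, tip address) pair found in the given HTML."""
    parser = TipLinkParser()
    parser.feed(html)
    parser.close()
    return parser.tips
```

A browser extension or wallet could run something like this over the page you came from and offer to send a small tip to the address it finds. How the payment itself would happen is left open here.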

Maybe the internet wouldn't be so riddled with advertisements then 🤔

You can discuss this blog post on Hacker News or Stacker News.